Survey programming

Summary

This resource covers best practices for programming a survey using computer-assisted personal interviewing (CAPI) software. We primarily rely on examples using SurveyCTO, which is widely used by J-PAL and Innovations for Poverty Action (IPA), but the practices below apply to CAPI software generally. Resources on coding in SurveyCTO, provided by SurveyCTO itself as well as by J-PAL and IPA, are listed at the end of this resource. The resource is limited to the programming of the survey itself; for information on survey content, please see the relevant resources on measurement and survey design.

Essentials

- Survey programming is typically broken into three stages: planning, programming, and testing. In practice these stages are not distinct; programming a survey is often an iterative process.

- Use your survey platform’s tools (e.g., HTML formatting, note fields, and constraints) to help surveyors administer the survey.

- Standardize variable names and values, both within and across surveys, to reduce the time spent cleaning data.

- Minimize the burden on respondents: use relevance conditions to skip questions that do not apply, and pre-populate fields for which you already have data rather than repeating questions.

- Plan time to test the form in order to identify and correct programming errors before the survey launches.

Survey programming cycle

Designing a survey starts with developing a set of questions. These questions, along with their associated logic, are then programmed into CAPI software. The questions and modules will undergo several rounds of review, and changes can (and will) be made at the design, programming, testing, and implementation phases. It is important to plan for this: be prepared to log changes to both the survey questions and the survey code. During the initial programming phase, it is a good idea to implement a “code freeze,” described below, to allow time to program a stable version of the survey. After that, block designated time to incorporate modifications and to update your log of changes.

Planning

Because programming a survey is an iterative process, you will most likely make changes to the survey after it goes to the field: fixing programming issues, adding or deleting questions, changing wording, or adding notes to clarify unclear questions. These modifications also need to be designed, programmed, and tested, so you should develop a plan for them. The plan can be something as simple as “Before we launch an updated version of the survey, we will budget three days to program and test the changes and one day to train enumerators on the changes.” Having a plan in place before the survey launches means that you won’t have to create and implement one while the survey is live. Planning the process can also reduce the number of iterations required to finalize the survey, which can save time and money. Before programming, it is useful to consider the following:

Version control

You will most likely have two versions of the instrument: a Word document and the file used by the survey platform (in SurveyCTO, for example, a Google Sheets/Excel spreadsheet). Both files need to be updated whenever the survey instrument changes. Use the same version-control scheme for both documents, and make it clear which versions correspond to each other. An easy solution is to name both files “Survey_Name_V##.extension” or, similarly, “Survey_Name_Date.extension”.

-

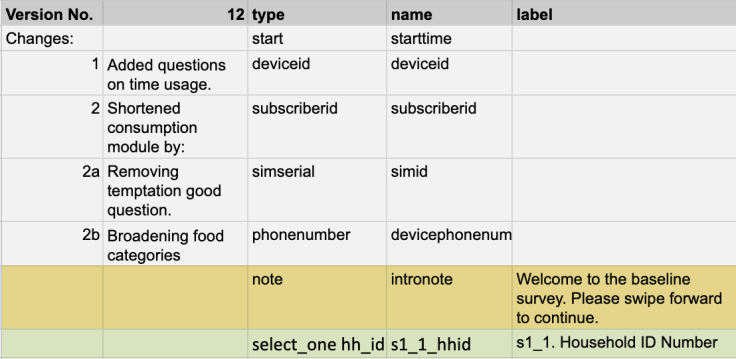

In addition to simple version control, you should document what changed and why. If using SurveyCTO, one option is to add a column to the SurveyCTO xlsx form (see image below). This column can be used to record the form version number and notes changes.1

-

Version control is particularly important if multiple people will program the survey. Some platforms allow you to program the survey in Google sheets, which eliminates some of these concerns. If you cannot use Google Sheets, develop a plan for how multiple people can work on the survey without overwriting each other's work or creating conflicting copies. This can be as simple as “Person X programs the form from 8:00 AM until noon, and person Y translates person X’s work from noon until 4:00 PM.”
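The file-naming convention described above is easy to apply consistently with a small script, so that the Word document and the form spreadsheet always carry the same version tag. A minimal Python sketch; the helper names, the two-digit zero padding, the ISO date format, and the `.docx`/`.xlsx` extensions are all assumptions for illustration, not features of any survey platform:

```python
from datetime import date


def versioned_filenames(survey_name: str, version: int) -> dict[str, str]:
    """Hypothetical helper: matching names for the Word instrument and the form.

    Follows a Survey_Name_V## pattern; the version is zero-padded to two
    digits so that files sort in version order.
    """
    stem = f"{survey_name}_V{version:02d}"
    return {"word": f"{stem}.docx", "form": f"{stem}.xlsx"}


def dated_filenames(survey_name: str, on: date) -> dict[str, str]:
    """Same idea with a Survey_Name_Date pattern (ISO dates sort chronologically)."""
    stem = f"{survey_name}_{on.isoformat()}"
    return {"word": f"{stem}.docx", "form": f"{stem}.xlsx"}


print(versioned_filenames("Baseline_HH", 3))
# {'word': 'Baseline_HH_V03.docx', 'form': 'Baseline_HH_V03.xlsx'}
```

Generating both names from one call is the point of the design: the two files can only diverge if someone renames one by hand.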

Code freezes

A “code freeze” is a point at which the field staff and PIs collectively agree that no further additions will be made to the current survey version before it goes to the field. This ensures that a version of the survey is thoroughly tested before further modifications are made: it allows time to catch bugs and limits the possibility of introducing mistakes into the survey through last-minute additions. This is important for two reasons:

- There will likely be bugs in the survey (this is normal and should not be a problem if sufficient time is left for code review). A code freeze blocks time to fix survey bugs before launching the survey.

- During training or piloting, the field team will likely have useful feedback on question phrasing, section order, ambiguity, exceptions, etc., and a code freeze will give you time to incorporate these changes.

Programming

Your ultimate goal in programming is to make the survey as clear as possible for three groups: surveyors, respondents, and the individuals who will be using the data.

Programming for surveyors

Consider what can make surveyors’ jobs difficult and how you can make them easier. Some aspects of survey programming reduce the possibility of human error, such as constraining fields to take certain values or showing questions only where appropriate. Others focus on reducing variation in how questions are asked by formatting text or providing hints.

Both kinds of programming for surveyors help produce higher-quality data. Useful practices include:

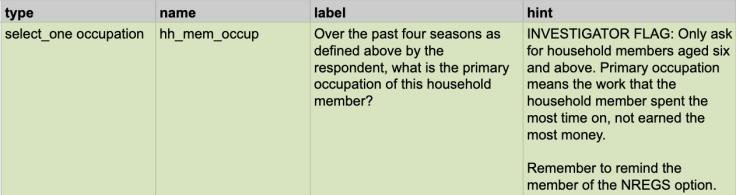

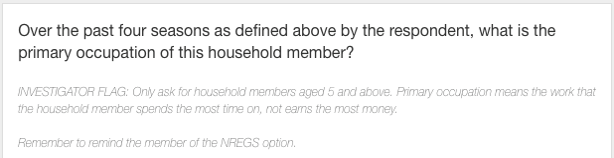

- Use hints to the surveyors. SurveyCTO allows you to add hints to questions, which appear in italics:

Is shown as:

- Use formatted text to prompt surveyors. SurveyCTO allows for HTML formatting, meaning you can bold text as well as change the font, size, color, and position of the text. Use this formatting as a way of standardizing surveyors’ interviewing processes; for example, if a multiple-choice question is bolded, it means surveyors need to read all options out loud to the respondent before soliciting an answer. Formatting can also help surveyors as they read the questions aloud, such as by reducing the chance they lose their place.

-

Limit the amount of text on one page and consider screen size, especially if the enumerator has to read it to the respondent. While programming, preview the survey on the device on which it will be administered to ensure the amount of text is appropriate.

-

Add question numbers in the text or label to make it easier for surveyors to remember which questions gave them trouble or where they notice mistakes.

-

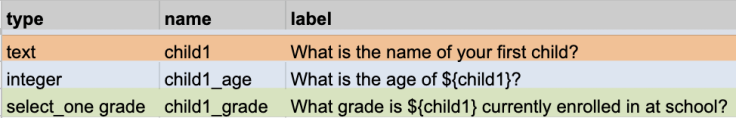

When asking follow-up questions, keep track of multiple topics by referencing answers to a previous question. At times you may want to ask follow-up questions based on a respondent’s answer to an earlier question (e.g. the age of a particular child). Pulling a previous answer into the text of a follow-up question can help enumerators keep track of who or what the follow-up question is referring to.
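Several of the practices above (bold text as a reading cue, question numbers in the label, and pulling earlier answers into follow-up questions) can be previewed outside the survey platform while drafting labels. The sketch below assumes SurveyCTO-style `${field}` references in labels; Python's standard `string.Template` happens to use the same `${name}` placeholder syntax, so it can fill them in for a quick preview. `build_label` and `preview` are hypothetical helpers, and this is not how SurveyCTO itself renders forms:

```python
from string import Template


def build_label(number: str, text: str, read_all_options: bool = False) -> str:
    """Prefix a question number and, if requested, bold the question text.

    Assumed convention (from the text): bold means "read all options aloud".
    """
    body = f"<b>{text}</b>" if read_all_options else text
    return f"{number}. {body}"


def preview(label: str, answers: dict[str, str]) -> str:
    """Fill ${field} references with earlier answers.

    safe_substitute leaves unknown references untouched instead of raising,
    so a reference with no answer stays visible in the preview.
    """
    return Template(label).safe_substitute(answers)


label = build_label("C4", "How old is ${child_name}?")
print(preview(label, {"child_name": "Amina"}))  # C4. How old is Amina?
print(preview(label, {}))                       # C4. How old is ${child_name}?
```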

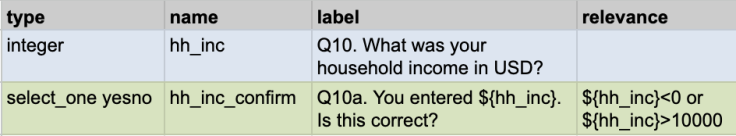

- Use both hard and soft constraints to verify responses, where useful. Soft constraints add additional questions to the survey when a response is odd: “You said your household income was $100. Is this correct?” Soft constraints are useful for responses that are unusual but not impossible (e.g., household income significantly above or below some value, crop yields that are unreasonable, etc.). Sample code is as a follows:

The surveyor will double-check the respondent’s response if it falls into a certain range (in this example, an income less than zero or higher than 10,000) and then proceed to the next question. Surveyors will need to be trained to go back and fix the hh_inc response if they select no to the confirmation question (you might also consider constraining the follow-up response to only take “yes”, forcing them to fix the income response if it was wrong).
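Writing the soft-constraint logic down explicitly can help when documenting the form for the surveyor manual, or when reusing the same thresholds in high-frequency checks. A minimal Python sketch, assuming the thresholds from the example above (below 0 or above 10,000); the function names are hypothetical, and in SurveyCTO the trigger itself would live in the form (e.g., in the confirmation question's relevance), not in Python:

```python
from typing import Optional

SOFT_MIN = 0       # lower threshold from the example in the text
SOFT_MAX = 10_000  # upper threshold from the example in the text


def needs_confirmation(hh_inc: float) -> bool:
    """True when the income is unusual enough to show the confirmation question."""
    return hh_inc < SOFT_MIN or hh_inc > SOFT_MAX


def next_step(hh_inc: float, confirmed: Optional[str] = None) -> str:
    """What the surveyor does after entering hh_inc.

    confirmed is the answer to the confirmation question ("yes"/"no"),
    or None if it has not been asked yet.
    """
    if not needs_confirmation(hh_inc):
        return "continue"          # ordinary value: no extra question
    if confirmed is None:
        return "ask confirmation"  # unusual value: show the confirmation question
    if confirmed == "yes":
        return "continue"          # unusual but confirmed by the respondent
    return "fix hh_inc"            # "no": go back and correct the entry
```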

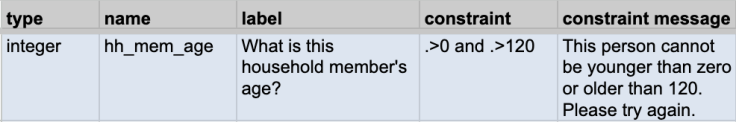

- Hard constraints are coded within a particular question and prevent the survey from advancing until a new value is entered. They are useful to prevent responses that are impossible or extremely unlikely (age less than zero, household size above 100, etc.). See the example code below.

Here, the survey will only allow the form to proceed if the age is greater than zero or less than 120. If the answer is outside of that range, the form will display the constraint message (“This person...please try again”).
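In XLSForm-style forms, a range constraint like this is typically written against the current field, `.`, as in `. > 0 and . < 120`; the exact expression in the image may differ. The Python sketch below mirrors that rule and shows why the connective matters: joining the two conditions with `or` accepts every number, because any value is either above 0 or below 120. The function names are hypothetical:

```python
def age_ok(age: float) -> bool:
    """Correct range check: both bounds must hold."""
    return age > 0 and age < 120


def age_ok_with_or(age: float) -> bool:
    """Buggy version: every number satisfies at least one side."""
    return age > 0 or age < 120


for value in (-1, 30, 150):
    print(value, age_ok(value), age_ok_with_or(value))
# -1 False True
# 30 True True
# 150 False True
```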

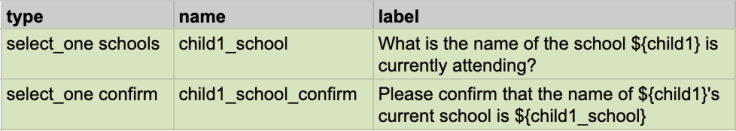

- Add a confirmation question for answers that cannot be constrained, but are important to record correctly (e.g. the name of a child’s school for an experiment clustered at the school level). Before the surveyor can proceed to the next question in the survey, they must confirm that the answer entered in the previous question was correct.

- Resist the temptation to “over-engineer” your surveys by adding in too many constraints. It is easy to introduce constraints that hamper data collection. For example, suppose you have a hard constraint that says family size must be larger than one, but some respondents live alone–in this case, the form would not proceed, even though a family size of one might be valid.

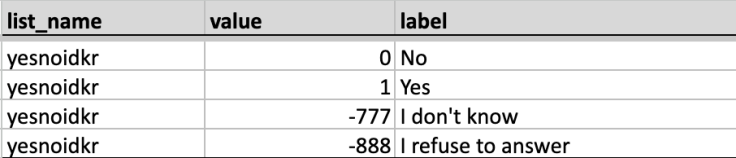

- Make questions required where necessary but provide outs. Required questions must be answered in order to proceed with the survey. It might be tempting to make every question required, but this is inflexible: there could be reasons why this question does not apply to the respondent, and now either they or the surveyor will have to make something up. Give respondents the chance to give answers like “I don’t know/I refuse to answer/Not applicable,” and keep a close eye on these questions during your high-frequency checks to get a sense of how often respondents don’t answer questions. You can code this into your survey by adding “I don’t know,” “I refuse to answer,” or “Not applicable” options to your choice selections. For text and numerical entries you can consider allowing surveyors to enter values such as “-777” to mean “I don’t know” or “-888” to mean “I refuse to answer.”
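For numeric fields, the out codes have to be allowed explicitly, or a range constraint will reject them. In an XLSForm-style constraint this could look like `(. >= 0 and . <= 120) or . = -777 or . = -888`; treat that expression as an illustration rather than a copy of the form in the image. The Python sketch below applies the same rule using the -777/-888 codes from the text; the names are hypothetical:

```python
DONT_KNOW = -777  # "I don't know"
REFUSED = -888    # "I refuse to answer"
OUT_CODES = {DONT_KNOW, REFUSED}


def numeric_answer_ok(value: int, lo: int, hi: int) -> bool:
    """Accept values in [lo, hi] plus the agreed out codes."""
    return lo <= value <= hi or value in OUT_CODES


print(numeric_answer_ok(45, 0, 120))    # True
print(numeric_answer_ok(-777, 0, 120))  # True
print(numeric_answer_ok(-1, 0, 120))    # False
```

Keeping the codes in named constants means the same values can be reused unchanged in the cleaning scripts.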

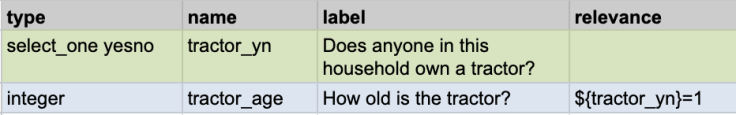

- Relatedly, use logic and skip patterns. For example, only ask a respondent if their children are in school if they have children. In SurveyCTO, this concept is called “relevance.” If too many people are answering “I don’t know” or “Not applicable,” as described above, you may need to add a relevance to those questions to make sure they are only shown where applicable. In the below example, the follow-up question “How old is the tractor?” is only shown to respondents who answered “yes” to “Does anyone in your household own a tractor?” (${tractor_yn}=1).

- As part of surveyor training, a detailed surveyor manual should accompany the questionnaire. The surveyor manual should include all the details needed for surveyors to understand and ask questions in the same way (e.g., if you have formatted text to appear in certain ways, like bold text to indicate the surveyor should read all answers to the question before soliciting a response, explain what that formatting indicates). Furthermore, you should explain to surveyors the logic, constraints, and skip patterns in the programmed survey, so it can be useful to write the surveyor manual in tandem with programming the survey. SurveyCTO can generate a Word document of your survey which includes the relevances and constraints; this can be used as a starting point for creating a surveyor manual.
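The relevance rule in the tractor example can be mimicked in a few lines, which is handy for checking skip patterns against the paper questionnaire or for listing, in the surveyor manual, which questions a given scenario should show. A minimal sketch; the `FORM` structure, the `tractor_age` field name, and the helper are hypothetical, and only the `${tractor_yn}=1` condition comes from the example above:

```python
from typing import Callable, Optional

Answers = dict[str, int]

# Each question: (field name, label, relevance rule or None if always shown).
FORM: list[tuple[str, str, Optional[Callable[[Answers], bool]]]] = [
    ("tractor_yn", "Does anyone in your household own a tractor?", None),
    # Mirrors the relevance ${tractor_yn}=1 from the example
    ("tractor_age", "How old is the tractor?", lambda a: a.get("tractor_yn") == 1),
]


def shown_questions(answers: Answers) -> list[str]:
    """Return the field names a respondent with these answers would see."""
    return [name for name, _label, relevant in FORM
            if relevant is None or relevant(answers)]


print(shown_questions({"tractor_yn": 1}))  # ['tractor_yn', 'tractor_age']
print(shown_questions({"tractor_yn": 0}))  # ['tractor_yn']
```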

Programming for respondents

Your goal is to create a survey which minimizes the respondent’s burden and time spent answering it. In addition to ethical considerations, there is also evidence that longer surveys might not generate higher quality data than shorter surveys (Kilic & Sohnesen 2015).

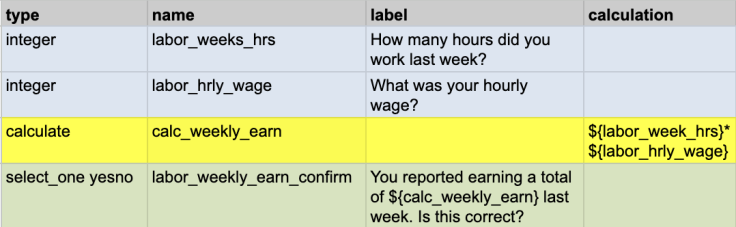

- Minimize the amount that respondents have to calculate themselves. Suppose you are recording a respondent’s weekly hours worked and hourly wage. To lessen the cognitive load on the respondent, you can calculate and show them their total weekly earnings, rather than ask them to do the mental math (see example below). SurveyCTO calls this a “calculation” (example code below).

- Display questions in a logical way. Many survey platforms have options for how questions and groups of questions are displayed. For instance, you can build a household roster while displaying the details for each family member by specifying “table” in the group’s appearance column (see SurveyCTO’s guide to group appearances). This can help respondents recall which family members they have discussed, and give more accurate information.

- Use the information you have already learned about the respondent, when available. After your initial data collection (such as a baseline survey or listing exercise), you will have some information on respondents. Most survey platforms allow you to pull this information into the survey and incorporate it into questions. In SurveyCTO, this involves attaching a .csv file of baseline information to your survey and using the pulldata() function in a calculate field; see the SurveyCTO pre-load documentation for more information. This information can be used to shorten the survey: asking the respondent, “Last time we visited, you owned five plots and grew these six crops. Is this correct?” takes much less time than going plot by plot and crop by crop to generate the same information.
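The two techniques above, a calculation shown back to the respondent and a value pre-loaded from baseline data, can be sketched in Python to work out expected values while testing the form. The lookup mimics the idea behind SurveyCTO's `pulldata()` (find the row whose key column matches this respondent, then return one column from it), but it is not SurveyCTO code; the CSV contents, column names, and helper names are made up for illustration:

```python
import csv
import io

# Hypothetical baseline file that would be attached to the form as a .csv
BASELINE_CSV = """hhid,plots,crops
1001,5,6
1002,2,3
"""


def load_rows(text: str) -> list[dict[str, str]]:
    """Parse the CSV text into one dict per row (all values as strings)."""
    return list(csv.DictReader(io.StringIO(text)))


def pull_value(rows: list[dict[str, str]], column: str, key_column: str, key: str) -> str:
    """Return `column` from the first row where `key_column` == key, else ''."""
    for row in rows:
        if row[key_column] == key:
            return row[column]
    return ""


def weekly_earnings(hours: float, hourly_wage: float) -> float:
    """The calculation shown to the respondent instead of asking for mental math."""
    return hours * hourly_wage


rows = load_rows(BASELINE_CSV)
plots = pull_value(rows, "plots", "hhid", "1001")
print(f"Last time we visited, you owned {plots} plots. Is this correct?")
print(weekly_earnings(40, 2.5))  # 100.0
```

Returning an empty string for an unknown ID is a choice made for this sketch; while testing, an empty pre-fill is a useful signal that the respondent's ID is missing from the attached file.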

Programming for data analysts

Oftentimes the person programming the survey will be the one analyzing the data. Whether or not this is the case, there are a few things you can do while programming to minimize the amount of cleaning required later on.

- Use self-explanatory variable names so that variables are easy to find during analysis. For example, use hh_tot_income rather than q25inc, and prefix variables containing PII with pii_ (e.g., pii_varname) so they are easy to remove. However, avoid variable names that are too long: they become unwieldy during analysis and may be incompatible with some statistical software (e.g., Stata’s variable name limit is 32 characters).

- “other, specify” categories for multiple-choice questions should all be named in a similar way (e.g., rootvar_oth).

- If the same question is asked in multiple surveys or rounds of data collection, it is advisable to use the same name so that it's easy to compare answers and calculate changes.

- Questions from the same section should be named with a common prefix/suffix. For example, questions relating to the household can have the prefix “hh_”.

- Use conventions for value labels or codes for answers that are common to multiple questions. For example, -777 = “Don’t know” and -888 = “Prefer not to answer.”

- Keeping these values consistent throughout the survey will make it easier to identify and clean different types of missing data. Note that this will require additional programming; for instance, you want enumerators to be able to type -777 for text input questions, which your survey platform of choice may not normally permit.

- These values should also be the same across rounds: If employed=1 and unemployed=0 in the baseline, then you should use these values in subsequent survey rounds.

- In general, when asking binary questions, assign 1 to the response that affirms the question (e.g., if asking “What is your unemployment status?” let 1 = Unemployed and 0 = Employed, while if asking “What is your employment status?” let 1 = Employed and 0 = Unemployed). This style of coding makes the variable more intuitive to interpret: for example, the mean of the variable is the share of respondents who affirmed the question.

- Add select lists to standardize answers whenever possible, but include an “other” option to catch answers that may not be included in the list. For questions where answers can be predetermined (e.g., types of crops typically grown in a region, names of villages in a sample district), standardizing answers through a select list can avoid many data entry errors and simplify data cleaning and analysis downstream. This may be particularly important for variables that feed directly into the analysis, such as village or school name in a clustered randomized controlled trial. You can add some flexibility to this strategy by including an “other” option paired with a follow-up question where surveyors can enter text for answers that do not appear in the select list. You may also consider adding options to the select list if a substantial number of respondents give a particular answer through the “other” option.

- Make it easy to link respondents across rounds of surveys by pre-loading identifiers from the baseline survey or listing exercise into subsequent surveys. This way, the enumerator only needs to double-check that they are interviewing the correct respondent.
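Several of these conventions can be checked and applied with a short script during data cleaning. A minimal Python sketch, assuming a simplified version of Stata's naming rules (at most 32 characters; letters, digits, and underscores; not starting with a digit; Stata's reserved words are not checked) and the -777/-888 codes used above. The function names are hypothetical:

```python
import re
from statistics import mean

STATA_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]{0,31}")  # simplified rule
MISSING_CODES = {-777: "dont_know", -888: "refused"}


def bad_names(names: list[str]) -> list[str]:
    """Variable names that break the simplified Stata rule (too long or bad characters)."""
    return [n for n in names if not STATA_NAME.fullmatch(n)]


def split_missing(values: list[int]) -> tuple[list[int], dict[str, int]]:
    """Separate real answers from coded missing values, counting each missing type."""
    answers: list[int] = []
    counts = {label: 0 for label in MISSING_CODES.values()}
    for v in values:
        if v in MISSING_CODES:
            counts[MISSING_CODES[v]] += 1
        else:
            answers.append(v)
    return answers, counts


# 1 = Employed, 0 = Unemployed for "What is your employment status?"
employed = [1, 0, 1, -777, 1, -888]
answers, counts = split_missing(employed)
print(counts)         # {'dont_know': 1, 'refused': 1}
print(mean(answers))  # 0.75, i.e., 75% of those who answered are employed
print(bad_names(["hh_tot_income", "q25inc", "2nd_visit", "x" * 33]))
```

Because the missing codes are identical across questions and rounds, the same `split_missing` call works on every variable, and the missing-type counts can feed directly into high-frequency checks.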

Testing

Once the survey is programmed, plan one to three days of testing, depending on how complex the survey is. Sending a survey to the field with errors is costly and wastes respondents’ and surveyors’ time. Testing the form also generates data that can be used to set up data quality checks.

Three groups of people need to test the survey, each focusing on something different:

- Programmers test: Programmers know how the form should work and so should focus on testing the internal logic of the form (e.g., the skip patterns, constraints, etc.). It can be useful for programmers to test the form with a hard copy of the survey to verify the form’s logic. Programmers are also well suited to detect programming bugs (see the testing checklist below).

- Outsiders test: Outsiders (anyone uninvolved in programming the form) won’t know how the form should work. They are best suited to trying to break the form: they should give illogical or contradictory answers, or speed through the form as quickly as possible. It can be useful to have outsiders test the form with extreme cases (e.g., a family with 30 members). These extreme cases may be too time-consuming for programmers and surveyors to check but are useful to test in case they are encountered in the field. Outsiders should also use the checklist below while testing the form.

- Surveyors test: After programmers and outsiders have thoroughly tested the form, surveyors should do so as part of their training. They should focus on translation and interpretation of questions and should test the form on the device they will use to conduct surveys in the field. SurveyCTO also allows surveyors to switch back and forth between multiple languages, which can help with translation tests. It may be helpful to write out some scenarios for the surveyors to use to test the form (e.g., the respondent is not available to be interviewed, the respondent wants to leave the survey, a family with 10 members and details about them, or a farmer with six fields). Note that the surveyor test comes before the more formal test of the clarity of the survey content through a questionnaire pilot, so surveyors should focus on learning and testing the mechanics of the form rather than its content (read more on survey testing in our Surveyor hiring and training guide).

Testing checklist

This checklist should be used to ensure the survey programming was done correctly. Note any instances where the survey programming fails (e.g., a question on an individual’s age allows for negative responses). See the survey design checklist and questionnaire piloting checklist, respectively, to determine if the survey questions measure what you want them to measure, or if respondents understand the survey.

- If the question is supposed to be required, make sure it can’t be skipped without answering.

- If the question is supposed to be required, add an out such as “I don’t know/NA.”

- If you advance through a question quickly, make sure it doesn’t cause issues with the form.

- If the question requires text values, try entering numbers and symbols and consider whether those should be allowed. For example, if the question asks for an email address, “@” should be allowed. Also consider whether there should be a maximum or minimum number of characters.

- If the question should have instructions for surveyors, make sure those instructions appear and that they are formatted correctly (i.e., differently from the rest of the question text).

- Constraints: If the question has a constraint, check edge cases and outliers (e.g., if you constrain age to be between 0 and 100, try entering -1, -1000, 101, and 1000). Check that the constraint blocks the invalid responses, and only those. Test whether the programmer used “>” when they should have used “<”, or “>=” when they wanted “>”, etc.

- Relevances/skip patterns: If you select the response that should trigger follow-up questions, make sure they appear. Check to see if you can go back and change your response to this question and consider whether you want surveyors to be able to do the same.

- Calculations: Check if calculations are being done correctly. If SurveyCTO cannot perform the calculation, you will see “…” where the calculation should appear. Make sure the calculation is correct by also doing it by hand (e.g., if it multiplies two earlier fields, check this calculation by doing the math yourself).

- Pre-fill data: If you are pre-filling the form with data, make sure it is pulling in the correct data (e.g., is the form pulling in age when you need it to pull in gender, etc.). Like all calculations, if SurveyCTO cannot perform the pre-fill, it will display “...” where the prefill data should appear. If the pre-fill data is used in relevances or constraints, spend extra time checking those (e.g., if you have a section that is only shown to the treatment group, and you pre-fill the form with respondents’ treatment status, make sure this section appears for respondents in the treatment group).
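The constraint checks in this list can be partly automated: write down values that must be accepted and values that must be rejected, then run them against a copy of the rule before and after every form revision. A minimal Python sketch using the checklist's age example, assuming both 0 and 100 are valid ages; the harness and rule names are hypothetical, and the Python copy of the rule still has to be kept in sync with the form by hand. The `must_pass` list includes the boundaries themselves, which is where `>=` versus `>` mistakes show up:

```python
from typing import Callable


def check_constraint(rule: Callable[[int], bool],
                     must_pass: list[int],
                     must_fail: list[int]) -> list[str]:
    """Describe every value the rule handles wrongly (empty list = all good)."""
    problems = [f"{v} rejected but valid" for v in must_pass if not rule(v)]
    problems += [f"{v} accepted but invalid" for v in must_fail if rule(v)]
    return problems


def intended(age: int) -> bool:
    return 0 <= age <= 100


def typo(age: int) -> bool:
    # ">" typed instead of ">=" at the lower bound
    return 0 < age <= 100


EDGES_OK = [0, 1, 50, 99, 100]
EDGES_BAD = [-1, -1000, 101, 1000]

print(check_constraint(intended, EDGES_OK, EDGES_BAD))  # []
print(check_constraint(typo, EDGES_OK, EDGES_BAD))      # ['0 rejected but valid']
```

The same harness also catches over-engineered rules: a household-size rule written as `> 1` fails a must-pass value of 1, the respondent who lives alone.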

Modifying questions in an ongoing survey

Once a survey has launched and data is being collected, you should make modifications carefully. SurveyCTO can only export data that matches the latest version of the form. This means that if you update the survey and remove a question, you will not be able to access any responses to that question. Instead, you can prevent SurveyCTO from displaying the question by typing “yes” in the disabled column for that question. Alternatively, give the question a relevance condition that can never be met, so that it is never shown to respondents. In either case, you may want to download the data before implementing any change that could make certain fields inaccessible.
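Before deploying a revised form, it can help to compare the field names in the old and new versions and flag any that were deleted rather than disabled, since their data could become inaccessible on export. A minimal sketch; extracting the field names from the form files is left out (you might, for example, read the `name` column of the survey sheet), and the helper and example field names are hypothetical:

```python
def removed_fields(old_fields: list[str], new_fields: list[str]) -> list[str]:
    """Fields present in the old form version but missing from the new one, in original order."""
    new = set(new_fields)
    return [f for f in old_fields if f not in new]


v03 = ["hhid", "hh_inc", "hh_inc_confirm", "tractor_yn", "tractor_age"]
v04 = ["hhid", "hh_inc", "hh_inc_confirm", "tractor_yn"]
print(removed_fields(v03, v04))  # ['tractor_age'] -> disable it instead of deleting
```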

Survey platforms

Cost, security features, flexibility, and compatibility are all important aspects of choosing the right software for digital surveying. Below are a few of the commonly used platforms:

| Survey program | Pros | Cons | Resources |
|---|---|---|---|
| SurveyCTO | Good security features. It is possible to customize project roles and access for different people on the team. Customizable surveys (using built-in features and HTML). Allows for offline data collection. | Depending on your team, it can be expensive. However, there is a free version (see "Community subscription"), which can be helpful for design and pre-piloting with very small numbers of respondents. | SurveyCTO documentation |
| Open Data Kit (ODK) | Very flexible (can use pre-made forms or create your own). Good security features. Open-source and widely used, meaning it is free, gets regular updates, and has extensive help resources online. | Only compatible with Android devices. Requires significant upfront programming, as everything is customizable. | Getting started with ODK; Open Data Kit |
| formR | Designed for self-administered, rather than surveyor-administered, surveys. Easily integrates with R for quick data analysis and visualization. Can automatically send reminders and invitations via email or text message. | Requires knowledge of R and JSON to fully utilize. | formR documentation; formR Google group; formR GitHub |
| Ona | Can be run on smartphones (both iOS and Android), as well as computers. Has offline data collection capabilities. The Collaborator feature allows multiple people to have differential levels of form editing, downloading, and testing privileges. | Surveys can only be edited in XLS forms, which may be difficult for beginners. Subscription plans limit the total number of responses (unless the most expensive version is purchased). | Ona community support forum; Ona help center |
| Qualtrics | Sophisticated method for online distribution, with the ability to track respondents' progress in the survey. Surveys can be distributed offline on mobile devices as well. Good security features (Qualtrics is used by many Fortune 100 companies). | Expensive (and opaque pricing model). It does not have a comparative advantage in offline data collection. It requires some time to become familiar with the survey structure. | Learn to use Qualtrics; Qualtrics community support forum |
| SurveyBe | Surveys are coded via a drag-and-drop interface (could have a lower fixed cost to learn). It can collect data offline and focuses on easy collaboration to create forms. | Price is around 75 USD per month for the first 10 users. There is no publicly available documentation. | SurveyBe forum; SurveyBe FAQs |
| Pendragon | It offers a wide range of extra features: QR code/barcode scanning, advanced GPS features, mobile thermal printing, etc. Forms can be edited on a mobile device. | Somewhat complex price and data storage scheme. | Pendragon quick start guide; Pendragon field types guide; Pendragon advanced functionality guide |
| Blaise | It seems very flexible: supports mixed-mode surveys (e.g., a survey could have parts that are done via phone and computer) and has several CATI features such as an automatic dialer. Supports API integrations. | Opaque pricing model and appears to have a high fixed cost to learn. | Video tutorials; Community forum |
| Fieldata | Free if your project shares data. The focus is on monitoring, so it is built to quickly retrieve information. | It only works on Android devices. It does not have publicly available documentation or tutorials. The website does not have information on pricing (if projects want to use Fieldata but can't commit to sharing data). | No documentation is publicly available, but it is based on ODK. |

Last updated May 2023.

These resources are a collaborative effort. If you notice a bug or have a suggestion for additional content, please fill out this form.

We thank Liz Cao, Therese David, Sarah Kopper, Michala Riis-Vestergaard, and James Turitto for helpful comments and review. All remaining errors are our own.

Additional Resources

- SurveyCTO documentation

- SurveyCTO training courses

- SurveyCTO Support Center (subscribers only)

- SurveyCTO Community Forum

- The World Bank's Development Impact Evaluation (DIME) unit's set of resources with advice for coding in SurveyCTO

  - Lectures: Beginner, Intermediate, and Advanced

  - Exercises: Beginner, Intermediate, and Advanced

References

Kilic, Talip and Thomas Pave Sohnesen. “9 pages or 66 pages? Questionnaire design’s impact on proxy-based poverty measurement.” World Bank Development Impact (blog), March 11, 2015. https://blogs.worldbank.org/impactevaluations/9-pages-or-66-pages-questionnaire-design-s-impact-proxy-based-poverty-measurement. Last accessed July 15, 2020.