Building skills to advance impact evaluation: J-PAL’s Evaluating Social Programs course

This is the first blog in a series illustrating stories of how J-PAL’s training courses have built new policy and research partnerships and strengthened existing ones to advance evidence-informed decision-making. The second showcases how custom courses strengthened government partnerships, the third illustrates how online course participants applied learnings at their organizations, and the fourth shares how training activities within J-PAL's evaluation incubators helped partners engage more deeply with research design.

Since establishing our training group in 2005, J-PAL has delivered hundreds of in-person and online courses to train over 20,000 people around the world on how to generate and use evidence from randomized evaluations. From integrating existing evidence and data into decisions to building research partnerships to conduct impact evaluations, many of our training participants apply learnings to strengthen the culture of evidence use in their organizations.

Growing a community of practice for Evaluating Social Programs

Our flagship course, Evaluating Social Programs, is designed for policymakers, practitioners, and researchers interested in learning how randomized evaluations can help determine whether their programs are achieving their intended impact. Offered in several locations around the world each year, the week-long course combines interactive lectures, real-world case studies, and small group sessions to integrate key concepts with practical applications. Participants build connections with peers, academic researchers, and policy experts and come away from the course with the resources and tools to map out an evaluation strategy for their own programs and policies.

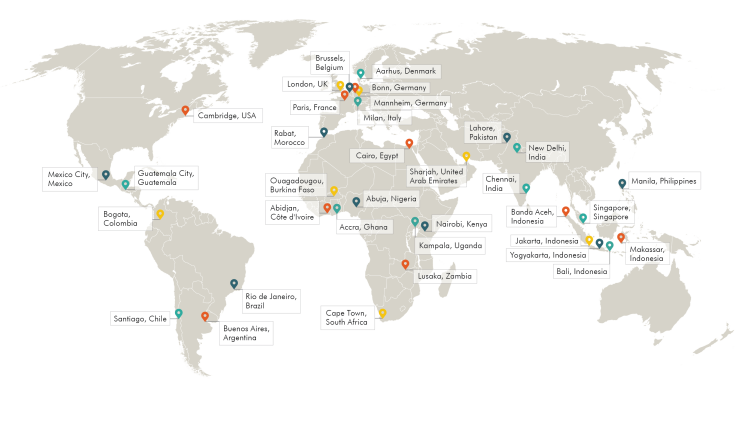

In this blog, we highlight two notable examples of how Evaluating Social Programs courses led to high-impact research and policy partnerships, while recognizing the impressive community of practice among course alumni around the world. First delivered in Cambridge, USA, and Chennai, India, in 2005, the course has trained over 2,750 participants through more than 75 offerings, held in locations ranging from Argentina to Zambia and, in response to Covid-19, live over Zoom. We also offer self-paced, online versions of the course in several languages to expand the reach of the course content to learners worldwide.

Forging a partnership for evaluating biometric enrollment in Liberia

J-PAL’s Digital Identification and Finance Initiative (DigiFI Africa) built a partnership with Liberia’s Ministry of Gender, Children, and Social Protection through J-PAL Africa’s virtual Evaluating Social Programs course in August 2021. Shadrach Saizia Gbokie, a program manager for Liberia's Social Safety Net Project, attended the training and took part in group work sessions led by a policy manager from DigiFI. After the training, members of our team and the Ministry discussed their work, as well as their interest in and capacity for conducting research.

During these conversations, DigiFI connected with the National Coordinator for Social Protection at the Ministry, Aurelius Butler. Since then, DigiFI has worked closely with Aurelius and the Ministry to develop a new randomized evaluation to help answer their questions around how to enroll urban communities in their new household registry using biometric data. Researchers Erika Deserranno, Andrea Guariso, and Andreas Stegmann were matched with this opportunity, and Aurelius and Dackermue Dolo from the Ministry later attended J-PAL Africa’s 2022 Evaluating Social Programs course to dive deeper into the research methodology. One of the questions the evaluation will try to answer is whether biometric identification systems help reduce barriers to accessing social programs and achieve accurate program targeting and efficiency.

The course on Evaluating Social Programs truly presented a space for the Ministry to fully engage with research and understand what we could achieve for the betterment of our citizens. By providing a comprehensive deep dive into research methodology we are now able to better articulate and refine our objectives. The course truly made an immediate impact in our work, as we have already begun using 90% of what we took from the course.

-Aurelius Butler, National Coordinator for Social Protection, Ministry of Gender, Children, and Social Protection, Liberia

Sparking an education technology evaluation in India

An Evaluating Social Programs training in New Delhi in 2015 was instrumental in seeding an evaluation of Mindspark, a personalized adaptive learning platform from the Indian ed tech company Educational Initiatives (Ei). At the time of the training, Pranav Kothari was Vice President at Ei and was overseeing the development of the Mindspark product as well as its deployment in Mindspark centers, which sought to bring a Hindi version of the tool to low-income neighborhoods in urban Delhi. He applied to J-PAL South Asia’s training, hosted in partnership with CLEAR South Asia, with a specific goal: to be sure that every child in a Mindspark center is learning. He was keen to undertake a systematic assessment of the program and its implementation to understand whether it worked as intended.

The course’s focus on both theory and practice enabled Pranav to take the first step in designing a potential evaluation of the Mindspark center program. During the training’s small group work sessions, he worked closely with Maya Escueta, former Policy and Training Manager at J-PAL South Asia, to develop a preliminary evaluation plan. Seeing the potential in the program and its evaluation, Maya visited the Mindspark centers and eventually brought on J-PAL affiliated researchers Karthik Muralidharan, Abhijeet Singh, and Alejandro Ganimian to conduct a full-scale randomized evaluation. Run in close collaboration with Pranav and the Ei team, the evaluation demonstrated that the program increased learning levels across all groups of students. The study has since become one of J-PAL’s landmark studies in education and offers valuable evidence on the use of computer-adapted learning in low-resource settings.

While we had been working on the ground for over two years, we wanted a third-party evaluation to determine whether our intervention was useful or not. This course helped us plan and conduct an RCT, allowing us to identify how well our program worked and where we could refine it. These results have led to newer versions of the program, and have helped us scale Mindspark to reach over 300,000 students, or about 500 times the number of students that were initially studying in the centers. I have also learned many skills which helped Ei in doing impact evaluations of other educational interventions.

-Pranav Kothari, Chief Executive Officer, Educational Initiatives

Training to strengthen evidence-informed decision-making

J-PAL’s Evaluating Social Programs course equips program implementers, policymakers, and researchers with the tools to understand and engage with randomized evaluations so that they can become better producers and users of evidence. This blog shines a light on just a few examples of how past participants have, over the years, applied what they learned at their organizations to advance evidence-informed decision-making.

Interested in learning how to strengthen impact evaluation at your organization? Explore and apply to one of J-PAL’s upcoming Evaluating Social Programs courses.